Last year, in the course of working on a client’s cloud project, we noticed their AWS bill was higher than it needed to be. We looked into it to figure out why, and we realized their infrastructure wasn’t properly optimized. There were several idle instances, over-provisioned resources, and unnecessary services—all driving up costs.

This is not a rare case. Many businesses unknowingly pay for services they don't need. The cloud can quickly become expensive for newcomers who migrate without a clear understanding of which services they actually require.

This matters even more on AWS, a comprehensive cloud platform with more than 300 services. It gives many of the world's best-known companies a world-class environment to run their services and innovate. With over 30% of the cloud market, it offers a strong foundation for innovation to millions of businesses across the globe.

AWS uses a pay-as-you-go model, meaning users pay only for what they use instead of paying upfront for a quarterly or yearly subscription. It is an attractive pricing model that keeps pricing fair and saves businesses from large upfront investments. However, many businesses never analyze their resource usage in depth, leaving potential savings on the table.

VentureBeat reports that nearly half of businesses struggle to control cloud costs. [VentureBeat]

Another report from HashiCorp-Forrester shows that 94% of enterprises experience avoidable cloud expenses due to factors like underused resources (66%), overprovisioned resources (59%), and skill shortages (47%).

For more than 80% of organizations, cloud cost control was a major challenge. [CIO]

It’s easy to overspend on cloud services without a proper plan. And with cloud costs rising year after year, it pays to watch closely how you use cloud resources so you can keep expenses down.

Cloud overspending is common even among large businesses, and there are several reasons for it. A major one is that many companies lack dedicated cloud experts, so they fail to use cloud services effectively, even though changes to just a few services can lead to significant cost reductions.

In this blog, we cover how to optimize AWS costs, along with the tools you can use to reduce your cloud bill further.

Here is what you'll gain:

Practical tools and tactics for optimizing resource usage.

Actionable checklists to track progress and ensure cost efficiency.

Thought-provoking strategies to maximize savings potential.

As an AWS development company, we help you unlock the full value of the cloud for your business. From initial planning to post-migration support, our team provides end-to-end guidance to ensure long-term success and a seamless transition to the cloud.

AWS cost optimization refers to a strategy of best practices, tools, and techniques for minimizing cloud expenses. From choosing right-sized instances to using cost-effective storage services, cloud consultants develop a thorough blueprint of tools and techniques to identify and eliminate unnecessary resource usage.

This helps minimize expenses without sacrificing performance. Failing to implement such a strategy can lead to overspending on resources that are not fully utilized.

Since AWS charges based on usage, costs can spiral if resources are left unchecked. As an AWS development company, we have identified the following common factors that affect overall cloud cost.

Unplanned AWS spending rarely appears when you understand the platform's nuances deeply. More often, it comes from specific things teams miss when managing their cloud environment. We've found three main areas businesses should keep an eye on to make sure they're not paying for services they don't need.

The Amazon Web Services platform is huge, with over 300 services, so costs can climb if you don't have a team looking after it. One reason is that AWS pricing can be tricky. You have to choose between different pricing models, such as On-Demand (pay as you go), Reserved Instances, and Spot Instances, and each one has its own pros and cons.

A developer might spin up a beefy EC2 server for a quick test and then forget to turn it off. Or your team might not realize there's a cheaper way to run things that could save a lot of money. This is where experts who know the nuances of the AWS platform come in handy. Without them, you're essentially guessing, and you're more likely to set up the wrong services or too many of them.

As a well-known AWS development company, Brilworks offers services to help small and medium-sized businesses save money on AWS. If you need your setup looked at to make it more efficient, you can get in touch with us.

As your AWS setup grows to span multiple accounts, regions, and interconnected services like Lambda, RDS, and S3, it becomes harder to track what’s running and why.

For example, you might have an S3 bucket quietly storing old data with no lifecycle policy, racking up charges, or an Auto Scaling group that’s overprovisioned because demand patterns shifted. The more sprawling your environment, the easier it is for unused resources to hide in plain sight.

AWS's 300-plus services are a blessing and a curse at the same time. You have so many options, but also countless ways to overspend. Experiments by inexperienced teams often lead to large bills because the services they spin up are left running.

Some companies also buy premium services where a cheaper alternative would have worked just as well. The sheer number of services makes things complicated, and if the team lacks in-depth knowledge of AWS, uninformed spending follows.

The following practices will help you optimize your cloud environment.

Stop paying for the services you’re not using. This part is often overlooked. Idle EC2 instances, unattached EBS volumes, or old S3 buckets can increase spending.

For instance, a company might spin up an EC2 instance for a one-off test and forget to turn it off, leaving it running for months at $20 to $50 a month. AWS Cost Explorer's rightsizing recommendations can surface idle instances, such as one with near-zero CPU activity for weeks, while Trusted Advisor flags orphaned resources like EBS volumes that aren't attached to anything.

A quick audit might reveal you’re spending $100 a month on a forgotten load balancer or an S3 bucket from a scrapped project. Deleting or stopping these is instant savings with zero impact on your operations.
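The audit logic itself is simple. Below is a minimal sketch, assuming you have already exported one daily average CPU value per instance (for example, from CloudWatch) into plain Python data. The `InstanceUsage` type, the instance IDs, the 2% threshold, the 14-day minimum, and the costs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InstanceUsage:
    instance_id: str            # hypothetical instance ID
    monthly_cost: float         # illustrative USD per month
    daily_avg_cpu: list[float]  # one average CPU % value per day


def find_idle(instances, cpu_threshold=2.0, min_days=14):
    """Return instances whose daily average CPU stayed below the threshold
    on every observed day, given at least `min_days` days of data."""
    return [
        inst
        for inst in instances
        if len(inst.daily_avg_cpu) >= min_days
        and max(inst.daily_avg_cpu) < cpu_threshold
    ]


fleet = [
    InstanceUsage("i-test-forgotten", 35.0, [0.3] * 21),        # leftover test box
    InstanceUsage("i-web-prod", 70.0, [40.0, 55.0, 62.0] * 7),  # busy production server
    InstanceUsage("i-brand-new", 20.0, [0.1] * 3),              # too little history to judge
]

idle = find_idle(fleet)
for inst in idle:
    print(f"{inst.instance_id}: looks idle, about ${inst.monthly_cost:.2f}/month")
print(f"Potential monthly savings: ${sum(i.monthly_cost for i in idle):.2f}")
```

Requiring a minimum amount of history keeps the check from flagging brand-new instances that simply haven't done much yet.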

Overprovisioning is a classic trap: picking an instance like a t3.large when a t3.small would do. Use AWS Compute Optimizer to review your instance usage (CPU, memory, network) and downsize where possible.

Let’s say you are running a small web app on a $40/month instance when a $10/month one handles the traffic just fine. That’s $30 a month, or $360 a year, wasted per instance. Right-sizing isn’t guesswork; it’s data-driven.
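To make the data-driven part concrete, here is a small sketch that picks the cheapest size able to absorb observed peak CPU and memory plus some headroom. The vCPU and memory figures match the t3.small, t3.medium, and t3.large specs, but the monthly prices are illustrative placeholders taken from the example above, not AWS list prices. The 20% headroom is also an assumption.

```python
# vCPU and memory match the t3.small/medium/large specs; prices are
# illustrative placeholders from the example above, NOT AWS list prices.
CATALOG = {
    "t3.small": {"vcpu": 2, "mem_gib": 2, "usd_month": 10.0},
    "t3.medium": {"vcpu": 2, "mem_gib": 4, "usd_month": 20.0},
    "t3.large": {"vcpu": 2, "mem_gib": 8, "usd_month": 40.0},
}


def right_size(current, peak_cpu_pct, peak_mem_gib, headroom=0.2):
    """Return (cheapest fitting size, annual savings vs. the current size).

    peak_cpu_pct is measured on the current instance; headroom is an
    assumed safety margin added on top of the observed peaks.
    """
    cur = CATALOG[current]
    needed_vcpu = cur["vcpu"] * peak_cpu_pct / 100 * (1 + headroom)
    needed_mem = peak_mem_gib * (1 + headroom)
    fits = [
        (spec["usd_month"], name)
        for name, spec in CATALOG.items()
        if spec["vcpu"] >= needed_vcpu and spec["mem_gib"] >= needed_mem
    ]
    if not fits:  # nothing in this catalog covers peak plus headroom
        return current, 0.0
    price, best = min(fits)
    return best, (cur["usd_month"] - price) * 12


print(right_size("t3.large", peak_cpu_pct=25, peak_mem_gib=1.2))
```

With a 25% CPU peak and 1.2 GiB of memory in use, the sketch recommends the small size and reports the $360 annual difference from the example.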

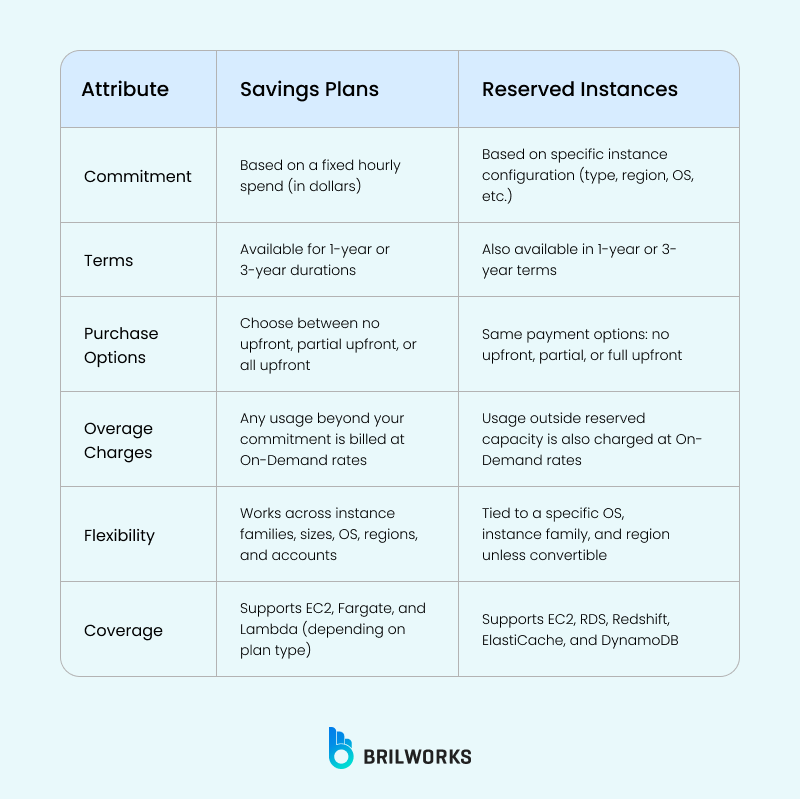

AWS’s On-Demand pricing is convenient but pricey. Think of it as paying full retail for something you use daily. Savings Plans and Reserved Instances (RIs) offer discounts of 30-60% for committing to consistent usage over 1-3 years.

For example, a business running a customer portal 24/7 could lock in a Savings Plan for their EC2 and Fargate usage, dropping costs from $1,000 to $600 a month. RIs work similarly but are more specific to instance types.

The catch? You need predictable workloads. AWS Cost Explorer’s “Coverage Reports” show how much of your usage could benefit, so you’re not blindly guessing. It’s like bulk-buying your cloud resources at a discount.
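Why predictability matters is easiest to see with numbers. The sketch below is a deliberately simplified Savings Plan model: one flat discount rate (40% here, matching the $1,000 to $600 example), an hourly dollar commitment that is billed whether or not you use it, and On-Demand billing for anything above what the commitment covers. Real discounts vary by service, instance family, region, term, and payment option, and the two usage profiles are hypothetical.

```python
HOURS = 720  # assume a 30-day month, billed hour by hour


def monthly_cost_with_plan(hourly_od_usage, commitment_per_hour, discount):
    """Simplified Savings Plan bill with a single flat discount rate.

    hourly_od_usage: On-Demand-equivalent spend for each hour (USD).
    commitment_per_hour: USD/hour committed, paid whether used or not.
    discount: fraction off On-Demand (0.4 means 40%).
    """
    # Each hour, the commitment covers usage worth commitment / (1 - discount)
    # at On-Demand prices; anything above that is billed at On-Demand rates.
    covered_od = commitment_per_hour / (1 - discount)
    return sum(
        commitment_per_hour + max(0.0, usage - covered_od)
        for usage in hourly_od_usage
    )


u = 1000 / HOURS         # On-Demand $/hour for a $1,000/month workload
rate = 0.40              # assumed flat 40% discount
commit = u * (1 - rate)  # commit to exactly the average hourly spend

steady = [u] * HOURS                            # customer portal running 24/7
spiky = ([2 * u] * 12 + [0.0] * 12) * (HOURS // 24)  # busy 12 h, idle 12 h, same total

for name, usage in (("steady", steady), ("spiky", spiky)):
    plan = monthly_cost_with_plan(usage, commit, rate)
    print(f"{name}: On-Demand ${sum(usage):,.2f} -> with plan ${plan:,.2f}")
```

Both profiles cost $1,000 On-Demand, but only the steady one drops to about $600. The spiky one ends up around $1,100, because the commitment is wasted during idle hours while peak hours spill over to On-Demand rates. That is why it is safer to commit only to usage you are confident will persist around the clock.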

Spot Instances let you use spare AWS capacity at up to 90% off On-Demand prices, ideal for flexible, interruptible jobs like data analysis or CI/CD pipelines. Say you’re processing logs: an On-Demand instance might cost $0.10/hour, but a Spot Instance could be $0.02/hour.

The trade-off is that AWS can reclaim Spot Instances with a two-minute warning, so you need fault-tolerant workloads such as batch jobs or rendering tasks. Check the Spot Instance Advisor to see which instance types are least likely to be interrupted, and you have a cheap, powerful option.
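Spot savings usually hold up even after you account for interruptions, as long as the work you lose each time is bounded. This sketch uses the $0.10 and $0.02 hourly rates from the example above. The 100-hour job, the five interruptions, and the half hour of redone work per interruption (roughly what hourly checkpointing might cost you) are assumptions for illustration, and real Spot prices change over time.

```python
def job_cost(work_hours, price_per_hour, interruptions=0, lost_hours_per_interruption=0.0):
    """Cost of a batch job, including work that must be redone after interruptions."""
    billed_hours = work_hours + interruptions * lost_hours_per_interruption
    return billed_hours * price_per_hour


on_demand = job_cost(100, 0.10)
spot = job_cost(100, 0.02, interruptions=5, lost_hours_per_interruption=0.5)

print(f"On-Demand: ${on_demand:.2f}, Spot (with redone work): ${spot:.2f}")
```

Checkpointing more often shrinks `lost_hours_per_interruption`, which is the main lever that keeps Spot cheap for long-running jobs.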

Paying for capacity you don’t need is not a great move. Auto Scaling adjusts your services based on usage—increase capacity for a traffic spike (like Black Friday) and scale down when it’s quiet. A retail app might run 10 instances during a sale but only 2 overnight.

Without Auto Scaling, you’re stuck at 10 all the time, wasting money. Set it up with CloudWatch metrics (e.g., CPU above 70% triggers a scale-out), and you’re covered. One e-commerce client saved 40% by scaling dynamically instead of overprovisioning for peak load year-round.
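Here is a simplified sketch of the rule described above: scale out by two instances when average CPU exceeds 70%, scale in by two below 30%, and never go outside 2 to 10 instances. The step size, the 30% scale-in threshold, and the hourly CPU readings are assumptions, and for simplicity the readings are treated as given rather than reacting to capacity changes as a real fleet's would. In AWS itself you would express this as a CloudWatch alarm driving a step scaling policy, or let a target tracking policy choose the capacity for you.

```python
def desired_capacity(current, avg_cpu, min_size=2, max_size=10,
                     scale_out_at=70.0, scale_in_at=30.0, step=2):
    """Step rule: add `step` instances above scale_out_at, remove `step`
    below scale_in_at, and always stay within [min_size, max_size]."""
    if avg_cpu > scale_out_at:
        target = current + step
    elif avg_cpu < scale_in_at:
        target = current - step
    else:
        target = current
    return max(min_size, min(max_size, target))


# Hypothetical hourly average CPU readings during a sale day.
cpu_by_hour = [20, 20, 75, 85, 90, 80, 60, 25, 20, 15]

capacity, trace = 2, []
for cpu in cpu_by_hour:
    capacity = desired_capacity(capacity, cpu)
    trace.append(capacity)

print("instances per hour:", trace)
print(f"instance-hours: {sum(trace)} with scaling vs {10 * len(trace)} fixed at peak")
```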

Storage can be a silent budget killer if you’re not proactive. S3 buckets, for instance, might hold logs or backups you haven’t touched in years, costing $0.023/GB/month when they could be $0.004/GB/month in Glacier.

Set up S3 Lifecycle Policies to automatically shift data to cheaper tiers or delete it after a set period—say, moving 6-month-old logs to Glacier Deep Archive. Review your storage with S3 Analytics to see what’s worth keeping or cutting.
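As a sketch, the lifecycle rule described above can be written as the configuration structure that the S3 `PutBucketLifecycleConfiguration` API (boto3's `put_bucket_lifecycle_configuration`) accepts. The `logs/` prefix, the 180-day transition, the two-year expiration, and the bucket name in the comment are assumptions. Choose periods that match your retention requirements, and keep in mind that Glacier Deep Archive has a minimum storage duration.

```python
import json


def log_archive_lifecycle(prefix="logs/", archive_after_days=180, expire_after_days=730):
    """S3 lifecycle configuration: move objects under `prefix` to Glacier Deep
    Archive after `archive_after_days`, then delete them after `expire_after_days`."""
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-logs",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "DEEP_ARCHIVE"}
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


config = log_archive_lifecycle()
print(json.dumps(config, indent=2))

# Applying it requires boto3 and AWS credentials (not run here); the bucket
# name below is hypothetical:
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-log-bucket", LifecycleConfiguration=config)
```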

Without visibility, you’re guessing who’s spending what. Tagging resources (e.g., “Project: Marketing” or “Env: Dev”) and using AWS Budgets gives you control. You can see if the dev team’s racking up $500 on untagged instances or if a test environment’s gone wild.
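Once resources are tagged, attributing spend is a simple group-by. The sketch below works on hypothetical cost line items (resource IDs, costs, and tags are invented) and puts anything missing the tag into an `(untagged)` bucket, which is often where surprises hide. In a real account, user-defined tags must also be activated as cost allocation tags before they show up in Cost Explorer and billing reports.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key):
    """Sum cost per value of `tag_key`; items without the tag go to '(untagged)'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "(untagged)")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical monthly line items (resource IDs, costs, and tags are invented).
items = [
    {"resource": "i-0a1", "cost": 120.0, "tags": {"Project": "Marketing", "Env": "Prod"}},
    {"resource": "i-0b2", "cost": 300.0, "tags": {"Env": "Dev"}},
    {"resource": "i-0c3", "cost": 200.0, "tags": {}},
    {"resource": "marketing-assets", "cost": 45.5, "tags": {"Project": "Marketing"}},
]

print(cost_by_tag(items, "Project"))
print(cost_by_tag(items, "Env"))
```

Grouped by `Project`, $500 of this bill has no owner at all, exactly the kind of gap the tagging policy is meant to close.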

Data transfer costs can blindside you—moving 100GB out of S3 to the internet costs $9, but keeping it in-region is free. Use CloudFront to cache content closer to users, cutting egress fees and speeding up delivery. Check Cost Explorer’s “Data Transfer” section to spot patterns—like excessive cross-region replication—and tweak your architecture to keep data local where possible.

Running an EC2 instance 24/7 for a small task is overkill when AWS Lambda charges only for execution time. Say you have an image-resizing job: EC2 might cost $20/month to stay on, while Lambda runs it for $2/month based on usage. It’s perfect for sporadic workloads, with no idle costs.

Check if your app has event-driven pieces that could go serverless—it’s not universal, but it’s amazing where it fits.
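A rough way to check whether a job belongs on Lambda is to estimate its monthly cost from invocations, duration, and memory. The default rates below ($0.20 per million requests and $0.0000166667 per GB-second) are assumptions based on published x86 pricing in us-east-1 at the time of writing. Check the current Lambda pricing page for your region, and note that the sketch ignores the free tier. The workload (150,000 resizes a month at 800 ms with 1,024 MB) is hypothetical.

```python
def lambda_monthly_cost(invocations, avg_duration_ms, memory_mb,
                        usd_per_million_requests=0.20,
                        usd_per_gb_second=0.0000166667):
    """Estimate monthly Lambda cost: request charges plus compute (GB-seconds).
    Default rates are assumptions; check current pricing for your region."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * usd_per_million_requests
    return request_cost + gb_seconds * usd_per_gb_second


def break_even_invocations(ec2_monthly_usd, avg_duration_ms, memory_mb, **rates):
    """Monthly invocations at which Lambda would cost as much as an always-on instance."""
    per_invocation = lambda_monthly_cost(1, avg_duration_ms, memory_mb, **rates)
    return ec2_monthly_usd / per_invocation


lambda_cost = lambda_monthly_cost(150_000, avg_duration_ms=800, memory_mb=1024)
print(f"Lambda: about ${lambda_cost:.2f}/month vs EC2: $20.00/month")
print(f"Break-even: about {break_even_invocations(20.0, 800, 1024):,.0f} invocations/month")
```

Above the break-even volume, and for steady long-running work, an always-on instance can become the cheaper option again, which is why serverless fits sporadic workloads best.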

Costs can go up if you’re not watching. Use AWS Cost Explorer to check your bill. It allows you to filter by service, region, or tag to spot trends or surprises. Moreover, it is a good idea to set a recurring calendar reminder to check monthly and look at usage spikes or services you don’t recognize. Pair it with Trusted Advisor for quick wins like “delete this idle resource.”
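The monthly check can be partly automated. This sketch compares two months of per-service totals (hypothetical figures with simplified service labels, for example copied from a Cost Explorer report grouped by service). It flags services that are new or that grew by at least 25% and $10. Both thresholds are assumptions to tune for your bill.

```python
def flag_changes(last_month, this_month, pct_threshold=25.0, min_delta_usd=10.0):
    """Return (service, reason, cost) for services that are new this month or
    grew by at least pct_threshold percent AND min_delta_usd dollars."""
    flags = []
    for service, cost in sorted(this_month.items()):
        prev = last_month.get(service, 0.0)
        delta = cost - prev
        if prev == 0.0 and cost > 0:
            flags.append((service, "new service", cost))
        elif prev > 0 and delta >= min_delta_usd and delta / prev * 100 >= pct_threshold:
            flags.append((service, "increase", cost))
    return flags


# Hypothetical per-service totals in USD (service labels simplified).
last_month = {"EC2": 800.0, "S3": 120.0, "Lambda": 15.0}
this_month = {"EC2": 1100.0, "S3": 125.0, "Lambda": 16.0, "SageMaker": 240.0}

for service, reason, cost in flag_changes(last_month, this_month):
    print(f"{service}: {reason} (${cost:,.2f})")
```

Requiring both a percentage and a dollar threshold keeps tiny services with noisy bills from drowning out the changes that matter.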

Below is a curated list of AWS cost optimization tools, focusing on their key features and how they help you save money. We have included both native AWS and third-party options.

With AWS Cost Explorer, you can spot trends, like a sudden spike in EC2 costs from an unused test instance, and act on specific savings suggestions, such as buying RIs to cut costs by up to 60%. For example, a company might use it to discover it’s spending $500/month on underused S3 storage and adjust accordingly.

Provides detailed visualizations of your AWS spending and usage over the past 13 months, with forecasting for up to 12 months ahead.

Offers customizable reports to break down costs by service, region, or tag, helping you pinpoint high-spend areas.

Includes Reserved Instance (RI) and Savings Plans recommendations based on your usage patterns.
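If you prefer to pull the same data programmatically, Cost Explorer is also available through an API. The sketch below only builds the keyword arguments for boto3's `get_cost_and_usage` call (monthly unblended cost grouped by service, optionally also by a tag such as `Project`), so it runs without credentials. The date range and tag key are examples. Note that the end date is exclusive and that Cost Explorer API requests are billed per request.

```python
from datetime import date
from typing import Optional


def cost_by_service_request(start: date, end: date, tag_key: Optional[str] = None) -> dict:
    """Keyword arguments for Cost Explorer GetCostAndUsage: monthly unblended
    cost grouped by service and, optionally, by a cost allocation tag."""
    group_by = [{"Type": "DIMENSION", "Key": "SERVICE"}]
    if tag_key:
        group_by.append({"Type": "TAG", "Key": tag_key})  # at most two groupings
    return {
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},  # End is exclusive
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": group_by,
    }


request = cost_by_service_request(date(2024, 1, 1), date(2024, 4, 1), tag_key="Project")
print(request)

# With boto3 installed and credentials configured (not run here):
#   import boto3
#   response = boto3.client("ce").get_cost_and_usage(**request)
#   for period in response["ResultsByTime"]:
#       for group in period["Groups"]:
#           print(period["TimePeriod"]["Start"], group["Keys"],
#                 group["Metrics"]["UnblendedCost"]["Amount"])
```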

AWS Trusted Advisor is like an always-on consultant flagging waste, say $200/month on unused load balancers, and nudging you to fix it. One business caught a $300/month overprovisioned RDS instance this way and scaled it down without downtime.

Delivers real-time recommendations across cost optimization, security, and performance, based on AWS best practices.

Identifies idle resources (e.g., unattached EBS volumes or low-utilization EC2 instances) and suggests actions like deletion or downsizing.

Monitors service quotas (limits) so you know when usage is approaching them.

AWS Compute Optimizer takes the guesswork out of right-sizing. A team running a web app might find it’s using a $50/month c5.large when a $20/month t4g.small works better, saving $360/year per instance with no performance hit.

Uses machine learning to analyze workload patterns and recommend optimal EC2 instance types, sizes, and Auto Scaling configurations.

Suggests Graviton-based instances for up to 40% better price-performance.

Provides cost-saving estimates alongside performance impacts.

AWS Budgets gives you proactive cost control. A startup might set a $500/month budget and get alerted when a dev environment pushes it over, letting the team shut it down before the bill balloons.

Lets you set custom cost and usage budgets with alerts triggered at thresholds (e.g., 80% of $1,000/month).

Tracks spending by service, tag, or account, with forecasts based on historical data.

Integrates with actions like stopping EC2 instances when limits are breached.
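AWS Budgets can notify you on both actual and forecasted spend. The sketch below mimics that idea with a naive straight-line forecast (spend so far divided by days elapsed, times days in the month). AWS uses its own forecasting, so treat this as an illustration of how the 80% and 100% thresholds behave, not a reproduction of the service. The $1,000 budget and the spend figures are hypothetical.

```python
def budget_alerts(budget_usd, spend_to_date, day_of_month, days_in_month,
                  thresholds=(0.8, 1.0)):
    """Return ACTUAL/FORECASTED alert messages for each threshold crossed."""
    forecast = spend_to_date / day_of_month * days_in_month  # straight-line projection
    alerts = []
    for t in thresholds:
        limit = budget_usd * t
        if spend_to_date >= limit:
            alerts.append(f"ACTUAL: ${spend_to_date:,.2f} has passed {t:.0%} of budget (${limit:,.2f})")
        elif forecast >= limit:
            alerts.append(f"FORECASTED: ${forecast:,.2f} is on track to pass {t:.0%} of budget (${limit:,.2f})")
    return alerts


early = budget_alerts(1000, spend_to_date=500, day_of_month=12, days_in_month=30)
late = budget_alerts(1000, spend_to_date=850, day_of_month=25, days_in_month=30)

for message in early + late:
    print(message)
```

Forecast-based alerts are what buy you time: on day 12, actual spend is only halfway to the budget, yet the trend already points past it.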

AWS Cost Anomaly Detection catches costly mistakes fast, like a $1,000/day spike from a misconfigured Lambda function, and lets you fix them before the damage grows. One user traced a $700 anomaly to an unintended cross-region data transfer.

Employs machine learning to monitor spending patterns and flag unusual spikes (e.g., a 50% jump in one day).

Provides root cause analysis, linking anomalies to specific services or regions.

Sends customizable alerts via email or Slack.

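Instance Scheduler on AWS is perfect for cutting waste in dev/test setups. A company running 10 EC2 instances at $20/month each (about $2,400 a year) could save roughly $1,600 a year by running them only from 9 to 5, and more by also keeping them off on weekends.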

Automates start/stop schedules for EC2 and RDS instances based on usage needs (e.g., off at night).

Supports tagging to apply schedules across multiple resources.

Reduces costs for non-production environments without manual intervention.
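At its core, scheduling is a time check plus some arithmetic. This sketch assumes a hypothetical office-hours schedule (Monday to Friday, 09:00 to 17:00) and the 10 instances at $20/month from the example above. It is not Instance Scheduler's own configuration format, and it ignores costs that continue while an instance is stopped, such as its EBS volumes.

```python
from datetime import datetime

# Hypothetical office-hours schedule (not Instance Scheduler's config format).
RUN_DAYS = {0, 1, 2, 3, 4}     # Monday=0 ... Sunday=6, as returned by weekday()
START_HOUR, STOP_HOUR = 9, 17  # run from 09:00 up to, but not including, 17:00


def should_run(now: datetime) -> bool:
    """True if an instance on this schedule should be running at `now`."""
    return now.weekday() in RUN_DAYS and START_HOUR <= now.hour < STOP_HOUR


def annual_savings(instance_count, monthly_cost_24x7):
    """Compute-only savings from stopping instances outside the schedule."""
    hours_on_per_week = len(RUN_DAYS) * (STOP_HOUR - START_HOUR)
    fraction_off = 1 - hours_on_per_week / (7 * 24)
    return instance_count * monthly_cost_24x7 * 12 * fraction_off


print(should_run(datetime(2024, 6, 3, 10, 30)))  # a Monday morning
print(should_run(datetime(2024, 6, 8, 10, 30)))  # a Saturday
print(f"${annual_savings(10, 20.0):,.2f} saved per year")
```

With weekends off as well, the instances run only 40 of 168 hours a week, and the estimate comes to roughly $1,830 a year.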

Amazon S3 Storage Lens tackles storage bloat. A firm paying $300/month to keep old data in S3 Standard could use its insights to move that data to Glacier, dropping costs to about $50/month, a $3,000/year saving.

Offers a dashboard with insights into S3 usage and costs across buckets, regions, and storage classes.

Recommends lifecycle policies to move data to cheaper tiers (e.g., Glacier at $0.004/GB vs. Standard at $0.023/GB).

Highlights cost anomalies like excessive API requests.

Amazon CloudWatch is your eyes on efficiency. A team might set an alarm for EC2 CPU under 20%, find that a $30/month instance is overkill, and downsize it, saving $15/month per instance.

Monitors resource metrics (e.g., CPU, network) and sets alarms for low utilization or cost thresholds.

Integrates with Auto Scaling and Lambda for automated responses to usage changes.

Logs detailed usage data for cost analysis.
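As a sketch, the low-CPU alarm from the example above can be described as the parameters of CloudWatch's `PutMetricAlarm` API (boto3's `put_metric_alarm`). The instance ID is hypothetical, and the 20% threshold over twelve one-hour periods is an assumption. The code only builds the parameters, so it runs without AWS credentials.

```python
def low_cpu_alarm_params(instance_id, threshold_pct=20.0, period_s=3600, periods=12):
    """Parameters for CloudWatch PutMetricAlarm: alarm when an EC2 instance's
    average CPU stays below threshold_pct for `periods` consecutive periods."""
    return {
        "AlarmName": f"low-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": period_s,
        "EvaluationPeriods": periods,
        "Threshold": threshold_pct,
        "ComparisonOperator": "LessThanThreshold",
        "TreatMissingData": "notBreaching",  # a stopped instance won't trigger it
    }


params = low_cpu_alarm_params("i-0123456789abcdef0")  # hypothetical instance ID
print(params)

# With boto3 and credentials (not run here); add AlarmActions, such as an
# SNS topic ARN, if you want notifications:
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**params)
```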

Each tool targets a core aspect of cost optimization:

Visibility (Cost Explorer, Cloudability)

Waste reduction (Trusted Advisor, Instance Scheduler)

Resource efficiency (Compute Optimizer, S3 Storage Lens)

Proactive control (Budgets, Cost Anomaly Detection)

Automation (CloudWatch)

Together, they form a toolkit to tackle the complexity of AWS pricing and usage. For instance, combining Cost Explorer’s insights with Instance Scheduler’s automation could save a mid-sized firm thousands annually by identifying and pausing idle resources—real savings backed by real data.

Pick the ones that match your needs: start with AWS’s free native tools for the basics, then layer in third-party tools if you need deeper analytics or automation. Either way, you’re equipped to stop overpaying and start optimizing.

AWS offers a variety of pricing models to suit different usage patterns and budget needs. Here's a breakdown of the key models:

On-Demand is the default model, offering flexibility at a higher cost. You pay for resources as you use them, billed per second in many cases. While convenient, it's generally best for short-term workloads or those with unpredictable spikes in demand. For more consistent usage, other models can be more cost-effective.

Savings Plans offer significant discounts (up to 72%) compared to On-Demand prices in exchange for a commitment to a consistent amount of usage, measured in dollars per hour, over a one- or three-year term.

AWS offers three types of Savings Plans:

Compute Savings Plans: Apply to usage across Amazon EC2, AWS Lambda, and AWS Fargate.

EC2 Instance Savings Plans: Offer the highest discounts but are less flexible, as they apply to a specific instance family within a region.

Amazon SageMaker Savings Plans: Optimize costs for SageMaker usage.

Organizations like Airbnb, Comcast, and Upwork have successfully reduced their AWS costs by utilizing Savings Plans. Carefully evaluating your usage patterns and choosing the right pricing model can lead to significant savings on your AWS bill.

Spot Instances provide access to unused EC2 capacity at steep discounts (up to 90% off On-Demand prices). However, they can be interrupted if AWS needs the capacity back. Spot Instances are ideal for workloads that can tolerate interruptions, such as batch processing, data analysis, and testing.

Reserved Instances (RIs) offer a powerful solution. You can save up to 75% compared to On-Demand pricing in exchange for a one- or three-year commitment, and zonal RIs also reserve capacity in a specific Availability Zone. That makes them a good fit for steady, predictable workloads, including mission-critical ones where guaranteed capacity brings peace of mind.

By optimizing your RI usage and combining it with other cost-saving tactics, like right-sizing resources and turning off unused ones, you can unlock the full cost-cutting potential of the cloud and keep your AWS bill under control.

Set detailed budgets for accounts, services (e.g., EC2, S3), and individual resources to monitor spending closely. You’ll get alerts when you hit thresholds.

Use AWS Cost Explorer to review up to the last 13 months of usage and costs, so you can spot trends and spikes in AWS service fees.

Enable AWS Cost Anomaly Detection, which uses machine learning to flag unusual spending patterns.

Implement chargeback (or showback). Assign costs to teams or projects (e.g., marketing’s S3 bill). Showback reports those costs to each team; chargeback bills them directly.

Match EC2 instance types to your workload.

Consider Savings Plans. When you commit to steady compute usage (e.g., $500/month) for one to three years, you can save 30-60% over On-Demand rates.

Use Spot Instances for flexible, interruption-tolerant tasks like data processing, paying, for example, $0.02/hour instead of $0.10/hour On-Demand.

Schedule instances to start and stop automatically (for example, non-production EC2 or RDS instances outside working hours).

Serverless services such as AWS Lambda or Fargate are perfect for irregular workloads.

Activate S3 Intelligent-Tiering. It automatically moves S3 data between access tiers (for example, from frequent to infrequent access) based on how often the data is accessed.

Use S3 Glacier Instant Retrieval for rarely accessed data that still needs millisecond retrieval.

Delete unused EBS snapshots and volumes.

Pick the right database (RDS for SQL, DynamoDB for NoSQL) and size it to your load.

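Data leaving AWS gets pricey (e.g., 100GB costs about $9). Use VPC endpoints to keep traffic to AWS services on the AWS network, and consider Direct Connect, which has lower data transfer out rates, for heavy traffic to on-premises systems.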

Label resources (e.g., “Project: App1”) for tracking in Cost Explorer. It shows who’s spending what. It will be easier to allocate costs and spot waste.

Check for unused EC2 instances, RDS databases, or load balancers with CloudWatch or Trusted Advisor.

Ensure your RIs (e.g., $600/year for EC2) are fully used—Cost Explorer shows if you’re wasting coverage.
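Checking RI utilization comes down to comparing the hours you reserved with the hours you actually used. This sketch assumes four hypothetical RIs at $600 a year each (the figure used above), assumes your running instances all match the reservations, and looks at a whole year at once. Cost Explorer's RI utilization report does this for you per period.

```python
HOURS_PER_YEAR = 8760


def ri_utilization(ri_count, used_instance_hours, annual_cost_per_ri):
    """Return (utilization, unused commitment in USD) for one year of RIs.

    Usage beyond the reserved hours is billed On-Demand, so utilization is
    capped at 100%.
    """
    reserved_hours = ri_count * HOURS_PER_YEAR
    utilization = min(1.0, used_instance_hours / reserved_hours)
    unused_usd = (1 - utilization) * ri_count * annual_cost_per_ri
    return utilization, unused_usd


util, unused = ri_utilization(4, used_instance_hours=26_280, annual_cost_per_ri=600.0)
print(f"utilization {util:.0%}, unused commitment about ${unused:,.2f}/year")
```

At 75% utilization, a quarter of the $2,400 commitment, $600 a year, buys nothing, which is a signal to modify, exchange, or stop renewing those reservations.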

In this article, we have explored how to optimize AWS cloud cost using different tools and proven techniques. We trust you now have a clear understanding of how to effectively use the AWS platform. Implementing these practices can help you lower your cloud expenses. Furthermore, staying informed about the newest releases from cloud providers is beneficial, as these updates often enhance the experience for your cloud users.

As experienced AWS consultants, we specialize in delivering tailored AWS development services to businesses like yours. Whether you’re migrating to the cloud or refining an existing setup, we’re here to craft a cost-effective roadmap that fits your unique needs. Contact us today to explore how our AWS development services can optimize your cloud journey, or hire an AWS developer, and let’s build your success together.

Utilizing Savings Plans, Reserved Instances, and detailed budget planning with granular cost allocation can help you lock in predictable costs and avoid unexpected spikes. You can also start small with the free tier and scale up gradually as your needs grow. Alternatively, you can seek the assistance of a seasoned AWS service provider.

Go beyond just looking at the data. Deep dive into the recommendations provided by Cost Explorer and AWS Trusted Advisor. Implement actionable steps like right-sizing instances, exploring Spot Instances, and optimizing storage tiers. Additionally, consider adopting automated actions based on the insights gained from Cost Explorer.

Invest in managed services like Amazon DynamoDB or Amazon Redshift that handle resource provisioning and maintenance, reducing your operational burden. Additionally, consider utilizing third-party cloud cost optimization tools that offer automated recommendations and cost management features.

Yes, Spot Instances can be interrupted if capacity is needed elsewhere. However, you can mitigate this risk by designing interruption-tolerant workloads, running non-critical tasks on Spot Instances, and implementing fallback strategies.

Many valuable AWS tools like Cost Explorer and Trusted Advisor are available for free. You can also utilize open-source scripts and automation tools to optimize your environment without a significant upfront investment. Additionally, many MSPs offer cost-effective consulting services to help you get started with optimization strategies.
