“AI will take over the world” is something I hear almost every day. And why not? AI surrounds us with its astounding abilities: it can tell a story, do research, create, enhance, and even write a formal e-mail. We are all utilising it and making the most of it. While many of us use AI to hone our creativity and brainstorm, some people think that artificial intelligence, and generative AI in particular, is unsafe or can be misused.

It’s not wrong to be cautious. But in all the excitement, let’s not forget that there are misunderstandings about what AI can really do, and some of these misconceptions turn people away from it. The best way to prevent this is knowledge. If you know what is true and what is not, the consequences are far less dire than they are for those who know nothing.

First, we must face the fact that AI has completely changed the outlook of leaders. In the past few years, it has brought changes that we had not seen in decades. And as AI becomes more popular in our work and personal lives, it’s important to talk about how to use it responsibly. This means making sure AI is developed in a fair way and thinking about how it will affect people and society.

The emerging trends of AI are often part of the conversation, but its misconceptions are not. In this blog, we will debunk some common generative AI misconceptions. We’ll clear up the confusion and highlight how AI can be used for positive change.

Before we move to the AI myths, let's first talk about reality. Numbers do not lie, and recent surveys show the true potential of generative AI.

McKinsey recently published its report on the state of AI, and the numbers are not surprising. 78% of respondents said their organizations use AI in at least one business function, and 71% said their organizations regularly use generative AI in at least one function.

As for who oversees the functions that utilize AI, 28% of respondents said that their CEO is responsible for AI governance in their organization. 13% of respondents say their organizations have hired AI compliance specialists, and 6% report hiring AI ethics specialists.

These numbers are pointing toward what AI is capable of. In just a few years, the adoption rates have gone through the roof, and the future looks bright.

So, amid this fast-paced growth, knowing the ins and outs of generative AI can help you navigate it better.

For more detailed analysis and statistics, read our blog: The State of Generative AI in 2025

What you see is often not the whole truth, and that holds here too. Most generative AI myths come from people believing what they see, which sets off a chain reaction that leads to misguided use. Let’s clear the fog of confusion and dive into these misconceptions:

The first misconception is that generative AI is infallible. We use the word “infallible” intentionally because it has a strong meaning: incapable of making mistakes, never failing. This might be, I think, the most overlooked yet very common generative AI misconception.

There is no doubt that AI’s capacity to produce content on demand is remarkable. With a few prompts and descriptions, tasks that once took days or a week are now done in hours. But this raises a follow-up question: how trustworthy and accurate is the output?

We get that when the work is done, you are the judge of it. But if I ask an AI chatbot to “give me statistics of AI adoption rate,” it will give me an answer. There is hardly any chance that it will say “I don’t know,” because it is built to produce a plausible-sounding answer no matter what.

Moreover, to respond to our prompts, it draws on patterns learned from massive quantities of online data. Even though the algorithms excel at interpreting text, they cannot reliably separate fact from fiction. This creates a substantial risk of generating inaccurate or misleading content.

Despite its impressive capabilities, relying solely on AI without human oversight can result in unverified and potentially deceptive outputs. These fabricated pieces of information are often referred to as “hallucinations,” meaning the AI invents details based on patterns in its data.

To safeguard against misinformation, human validation remains indispensable. It comes down to us to use AI responsibly and to become better “judges” of its output. AI is a powerful instrument, but it should be treated as just that: an instrument, not an infallible source of truth.
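One way to make human validation practical is to route the riskiest parts of an AI draft to a reviewer. The sketch below is a minimal illustration, not a complete fact-checking method: it assumes that sentences containing figures are the ones most worth checking, and the helper name `sentences_needing_review` and the sample draft are made up for this example.

```python
import re

# Assumption for this sketch: any sentence containing a digit carries a
# factual claim that a human should verify against a primary source.
NUMERIC_CLAIM = re.compile(r"\d")


def sentences_needing_review(ai_output: str) -> list[str]:
    """Return the sentences in ai_output that contain at least one digit."""
    # Naive split: break after '.', '!' or '?' when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", ai_output.strip())
    return [s for s in sentences if NUMERIC_CLAIM.search(s)]


# Hypothetical AI-generated draft used only for illustration.
draft = (
    "AI adoption is growing quickly. "
    "A survey found that 78% of respondents use AI at work. "
    "Leaders are paying attention."
)

flagged = sentences_needing_review(draft)
for sentence in flagged:
    print("VERIFY:", sentence)
```

A filter like this only decides what a person looks at first. The judgment about whether a claim is true still belongs to the human reviewer.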

Generative AI possesses remarkable abilities. It learns at an astonishing pace, digesting vast amounts of information in mere seconds and producing content from simple prompts. Its efficiency in creating with minimal effort and time brings into question the very nature of creativity. However, the reality is more complex. AI is great at copying styles and creating content from patterns in its data. It can be a valuable asset for producing variations on established themes or adhering to specific style guidelines.

Yet, AI currently lacks the intangible qualities that define human creativity. It doesn't possess the intuitive understanding, emotional depth, and raw originality that fuel our capacity to create something truly novel. Human creativity is a multifaceted process, drawing upon our social experiences, cultural influences, and a deep well of emotions – aspects that current AI struggles to replicate.

Ultimately, AI is a powerful tool for remixing and reimagining existing ideas, but it's not yet capable of replacing the spark of human ingenuity.

Since the debut of OpenAI's GPT models, the focus has been on creating ever larger language models. GPT-2 had 1.5 billion parameters, GPT-3 had 175 billion, and GPT-4, whose size has not been officially disclosed, might have a trillion. Initially, generative AI prioritized bigger models, assuming that more parameters automatically lead to improved results.

However, recent research has challenged this assumption: size alone does not guarantee better performance. In 2020, OpenAI's Kaplan et al. proposed scaling laws, sometimes called "Kaplan's law," describing a positive relationship between model size and performance. A 2022 paper from DeepMind explores this further. It argues that the amount of training data (the number of text tokens the model sees during training) is equally important.

This paper introduces Chinchilla, a model with 70 billion parameters, much smaller than its predecessors. Yet Chinchilla was trained on roughly four times as much data. The results are notable: Chinchilla surpasses larger models in certain areas, such as common sense and closed-book question answering benchmarks.

This research highlights a significant change in how generative AI is trained. It suggests that concentrating solely on model size may not be the most effective strategy. The right balance between model setup and the quality and amount of training data is crucial for generative AI to reach its full potential.
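The trade-off between model size and training data can be made concrete with a little arithmetic. The sketch below rests on two things the article does not state: the common rule of thumb that training compute is roughly 6 × parameters × tokens, and the figures reported in the DeepMind paper for Gopher (280 billion parameters, about 300 billion tokens) and Chinchilla (70 billion parameters, about 1.4 trillion tokens). Treat it as a rough estimate rather than an exact accounting.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rough training compute, using the approximation C = 6 * N * D."""
    return 6 * params * tokens


# Figures as reported in the Chinchilla paper (approximate).
models = {
    "Gopher": {"params": 280e9, "tokens": 300e9},
    "Chinchilla": {"params": 70e9, "tokens": 1.4e12},
}

for name, m in models.items():
    flops = training_flops(m["params"], m["tokens"])
    tokens_per_param = m["tokens"] / m["params"]
    print(f"{name}: {flops:.2e} FLOPs, {tokens_per_param:.1f} tokens per parameter")
```

Under these assumptions both models land in the same rough compute range, between 5e23 and 6e23 FLOPs. Chinchilla spends that budget on about 20 tokens per parameter, while Gopher sees about one, which is the balance the paper argues for.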

LLM is short for "Large Language Model." These AI models learn from massive amounts of data, enabling them to understand and generate text that sounds human-like. However, the idea that one LLM can do everything we need for language tasks is still not a reality.

It's important to know that relying on just one LLM isn't always the best choice. LLMs can generate human-like text, summarize information, and even assist with coding, but each model is optimized for different strengths and purposes. For example, Gemini is designed for conversational AI, making it great for interactive discussions, while ChatGPT focuses more on structured responses and information retrieval. Similarly, Meta's LLaMA models prioritize research and efficiency over commercial applications.

Beyond general-purpose AI, specialized models are emerging to meet industry-specific needs. A legal document requires a different level of precision and a more formal tone than marketing copy. A medical AI assistant needs to be trained with highly accurate, peer-reviewed health data, while an AI-powered creative writing tool may focus on style and storytelling.

Even within companies, multiple LLMs may be used together. A chatbot might use one model for customer support queries and another for internal business insights. Businesses often fine-tune smaller LLMs tailored to their domain, rather than relying on a massive general-purpose model that may not fully grasp industry nuances.

Instead of seeking "one LLM to rule them all," the future of AI points towards a multi-model approach—where different AI models complement each other, each excelling in its respective domain.
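A multi-model setup can be as simple as a router that sends each query to the model best suited to it. The sketch below is a minimal illustration under stated assumptions: the model names (`support-model`, `insights-model`, `general-model`) and the keyword lists are hypothetical placeholders, and a production router would more likely use a classifier than keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRoute:
    model_name: str            # hypothetical identifier, not a real product
    keywords: tuple[str, ...]  # words that signal this route


# Hypothetical routes: one model for customer support, one for internal insights.
ROUTES = (
    ModelRoute("support-model", ("refund", "order", "password")),
    ModelRoute("insights-model", ("revenue", "forecast", "churn")),
)
DEFAULT_MODEL = "general-model"  # fallback for everything else


def pick_model(query: str) -> str:
    """Return the name of the first route whose keywords appear in the query."""
    words = set(query.lower().split())
    for route in ROUTES:
        if words & set(route.keywords):
            return route.model_name
    return DEFAULT_MODEL


print(pick_model("How do I reset my password"))
print(pick_model("Show the revenue forecast for Q3"))
print(pick_model("Write a haiku about spring"))
```

Keeping the routing table separate from the lookup logic means a new specialized model can be added without touching the rest of the application.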

Many assume that generative AI tools are entirely free or come at a very low cost. While it’s true that companies offer free versions of AI models like ChatGPT, Gemini, and Claude, these versions usually come with significant limitations.

Generative AI models require constant maintenance, updates, and improvements. They need access to fresh training data to stay relevant, along with safeguards to prevent biased or inaccurate outputs. This continuous upkeep requires massive computational power, storage, and human oversight—all of which add to the cost.

For users who need advanced capabilities, the free versions are often not enough. Paid tiers, such as ChatGPT Plus ($20/month for GPT-4 access) or Microsoft’s Copilot ($30/month), provide access to better models, faster response times, and additional features. Businesses that integrate AI into their operations often pay even more for API access, custom model fine-tuning, and cloud computing resources.

Beyond subscription fees, companies using generative AI at scale face hidden costs, such as higher cloud usage, security compliance, and additional human oversight to ensure responsible AI deployment. While AI can automate many tasks, it still requires human involvement to manage accuracy, ethical concerns, and potential biases.
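To see how quickly subscription fees add up, here is a back-of-the-envelope sketch. It uses the monthly prices quoted above and assumes a hypothetical team of 50 seats on a simple per-seat pricing model. It deliberately leaves out the hidden costs (cloud usage, compliance, human oversight), so real totals would be higher.

```python
def annual_subscription_cost(price_per_month: float, seats: int) -> float:
    """Yearly cost of a per-seat monthly subscription."""
    return price_per_month * 12 * seats


SEATS = 50  # hypothetical team size, chosen only for illustration

# Monthly prices as quoted in the article; per-seat billing is an assumption.
plans = {"ChatGPT Plus": 20.0, "Microsoft Copilot": 30.0}

for name, price in plans.items():
    total = annual_subscription_cost(price, SEATS)
    print(f"{name}: ${total:,.0f} per year for {SEATS} seats")
```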

Generative AI is gaining popularity in business, as big tech firms and other companies use it to boost efficiency and productivity. Business leaders must recognize that simply implementing AI doesn't automatically guarantee a competitive advantage. Businesses need a creative AI strategy to achieve their goals. How and when AI is used matters just as much, since misusing it can put you behind your competitors.

A recent study by BCG found that about 90% of participants who used GPT-4 for creative tasks improved their performance, reaching a level roughly 40% higher than those who worked without it. However, those who used it for business problem-solving saw a 23% decline.

Business leaders should be mindful of the challenges associated with implementing generative AI. Utilizing AI for tasks that align with its strengths, such as generating creative content, can unlock substantial benefits. However, forcing it into areas where human judgment and reasoning are essential, like complex problem-solving, can have negative consequences.

Generative AI might seem like a groundbreaking innovation that appeared overnight, but the reality is that it builds on decades of existing AI and machine learning research. While tools like ChatGPT and DALL·E have recently gained mainstream attention, the foundations of generative AI date back to earlier developments in natural language processing (NLP), neural networks, and deep learning.

For example, machine learning models have been used for text generation and translation since the early 2000s, and AI-powered image synthesis has been in development for years. The introduction of transformer-based architectures, like those behind GPT models, significantly improved AI’s ability to generate coherent and contextually relevant text, but it didn’t emerge from thin air.

Moreover, many generative AI models rely on pre-existing data and computational techniques rather than creating entirely new methods of learning. What’s truly different today is the scale and accessibility of these models, thanks to advances in computing power, cloud infrastructure, and vast datasets.

So, while generative AI feels revolutionary, it’s more an evolution of past technologies than a completely new invention.

Read the entire timeline of generative AI in this blog: Evolution of Generative AI

Most people associate generative AI with chatbots like ChatGPT writing articles or software like DALL·E creating digital images. While text and images are the most common uses people think of, generative AI does much more.

For instance, AI is being used to compose music, with tools like Google’s MusicLM creating original music from text prompts. In video, tools like Runway and Pika Labs can produce short AI-generated clips, pushing the boundaries of digital content creation. In software development, tools like GitHub Copilot assist developers with code suggestions and debugging.

Besides creative pursuits, generative AI is also used in scientific research and medicine. It can help generate new drug molecules, accelerate protein folding research, and design new materials. In finance, it is used for predictive modeling of stock trends, and in game development, it creates procedurally generated worlds and characters.

Thus, even though the most obvious outputs of generative AI are images and text, these are only the beginning. The technology is now moving into realms that were long thought to be the exclusive domain of human expertise.

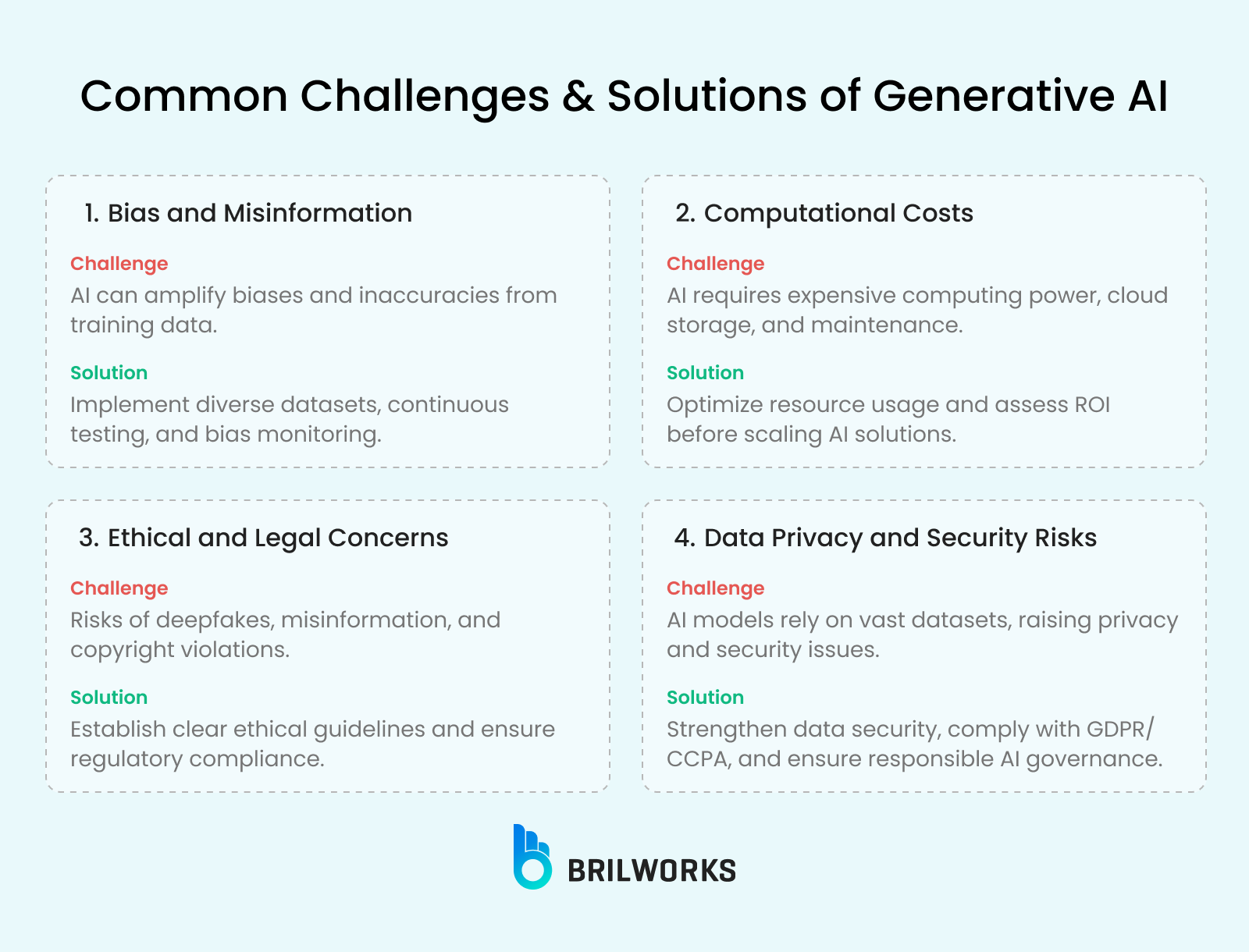

Navigating generative AI policies has become important because many new laws and regulations are now in place. In recent years, the use of AI has grown profoundly, and the need for better and, more importantly, responsible use has grown with it. These policies pave the way for that by addressing some of the challenges that come with generative AI. Let’s look at some of those challenges:

AI is only as good as the data it is trained on. If the data is inaccurate or biased, the AI can unintentionally amplify those flaws, creating misleading or even harmful outputs. This is especially concerning in areas like hiring, lending, and law enforcement, where AI outputs can carry real-life consequences. Organizations need to implement strong testing and monitoring to offset these risks.
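Testing for bias can start with a very simple check: compare how often each group receives a favourable outcome. The sketch below computes selection rates per group and the ratio between the lowest and highest rate. The data is invented for illustration, and the 0.8 threshold follows the "four-fifths" rule of thumb used in US hiring guidance, which is an assumption here and not something the article prescribes. It is one possible monitoring signal, not a full fairness audit.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to its share of favourable outcomes.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (assumes highest > 0)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical outcomes of an AI screening tool for two groups, A and B.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

THRESHOLD = 0.8  # "four-fifths" rule of thumb, an assumption for this sketch

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
status = "review needed" if ratio < THRESHOLD else "ok"
print(rates, round(ratio, 2), status)
```

Running a check like this on every model update turns "strong testing and monitoring" into a concrete, repeatable step, with a human deciding what to do when the ratio drops.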

Running and maintaining generative AI models requires substantial computing power. From training large-scale models to processing complex queries, the demand for high-performance hardware and cloud-based infrastructure can be costly. While some AI tools offer free versions, enterprise-level usage often comes with subscription fees, increased storage costs, and ongoing maintenance expenses. Businesses need to evaluate carefully whether the return on investment justifies these expenditures.

The ability of generative AI to create hyper-realistic content raises ethical and legal questions. Issues like deepfake videos, AI-generated misinformation, and copyright infringement are becoming more prominent. Governments worldwide are introducing regulations to address these concerns, making compliance an essential consideration for businesses adopting AI. Companies must establish clear ethical guidelines to ensure their AI applications align with legal and societal expectations.

Generative AI models often require access to large datasets, which can include sensitive or proprietary information. This raises concerns about data security, regulatory compliance, and potential breaches. With data protection laws like GDPR and CCPA in place, businesses must ensure they are handling user information responsibly and safeguarding against unauthorized access.

Generative AI, while capable, is not perfect. Make sure you have a proper roadmap to successfully implement generative AI. It's important to understand that the accuracy of generative AI depends on the quality of the data it learns from. It's also important to remember that it's not designed to replace human creativity but to help us be more creative and solve problems in new ways.

Even with its potential, there are ethical issues that need to be addressed. We need to think about privacy risks, how data is used, potential biases in the information it creates, and how to use AI-generated content responsibly.

The future of generative AI looks promising, but we need to approach it carefully. By understanding its benefits and limitations, we can make sure it helps everyone.

Ready to integrate AI into your strategy the right way? Start by evaluating your needs, choosing the right tools, and staying updated on industry best practices.

AI tools are not always accurate. Their accuracy depends on the data they are trained on, and they can sometimes generate inaccurate or misleading content.

AI is a tool to enhance, not replace, human creativity. It can help generate ideas, but true originality still comes from humans.

Bigger models are not necessarily better. Research shows that the amount of training data is as important as model size for AI performance.

While some basic versions are free, accessing full capabilities often requires a subscription.

